Chatbots are designed to provide fast and efficient responses to user queries, but this technology is far from perfect and ripe for abuse. Here are the biggest ChatGPT flaws and how hackers and spammers are abusing AI technology and using online chatbots for illegal activity.

What Is ChatGPT?

ChatGPT is an advanced language model, developed by the company OpenAI to simulate human dialogue. It is able to store past conversations and has the capacity to self-correct when incorrect.

ChatGPT has a human-like writing style and was trained with a comprehensive collection of Internet inputs including Wikipedia, blog posts, books, and academic articles.

Using ChatGPT is easy. You just type a question or command and the AI responds. But online chatbots are easily manipulated and abused, as we detail below.

ChatGPT Is Often Wrong

For an artificial “intelligence”, ChatGPT sure is wrong… a lot.

Chatbots like ChatGPT have demonstrated an inability to accurately solve basic mathematics, address logical inquiries, and even accurately provide basic information.

The tech website CNET famously used AI to write articles that contained incorrect information, including one explaining how loans work. The AI was completely wrong and, to the outrage of journalists everywhere, the CNET editorial staff was too lazy to double-check the AI for accuracy. The situation created a PR nightmare for CNET and is now a case study on how not to use AI tools like chatbots in journalism.

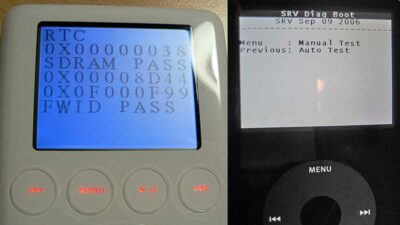

Unlike other AI-based technology, like Alexa and Siri, ChatGPT isn’t using data feeds from the Internet to answer user questions. Rather than generate a complete answer, ChatGPT produces one “token” of data at a time based on its training. That’s why ChatGPT slowly types its responses (which is a really cool effect). But the way that ChatGPT connects data tokens can lead to the platform “guessing” answers instead of factually answering them.
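To make the "one token at a time" idea concrete, here is a toy sketch in Python. It is not how ChatGPT is actually built: real chatbots use large neural networks over sub-word tokens, while this example assumes a simple word-pair counter. The function names (`train_bigrams`, `generate`) and the training text are made up for illustration. What it does show is how a model that only picks the most likely next word can produce a confident but wrong answer.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_tokens=5):
    """Emit one token at a time, always picking the most frequent follower."""
    output = [start]
    for _ in range(max_tokens):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # nothing ever followed this word in training
        next_token, _count = candidates.most_common(1)[0]
        output.append(next_token)
    return " ".join(output)

# Hypothetical training data: "blue" follows "is" more often than "green".
TRAINING_TEXT = "the sky is blue . the sky is blue . the grass is green ."
model = train_bigrams(TRAINING_TEXT)

# The model has seen "grass is green", but "is" was followed by "blue"
# more often overall, so it "guesses" the more common continuation.
print(generate(model, "grass", max_tokens=2))  # prints "grass is blue"
```

The toy model never checks whether its answer is true. It only continues the text with whatever was most common in its training data, which is the same basic reason a chatbot can sound sure of itself while being wrong.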

One of the last places that you want a guess or a wrong answer is at the doctor’s office. Users who think that ChatGPT’s medical advice is better than a human doctor’s could end up causing serious harm to themselves or others.

Promoting Bias

- ChatGPT is powered by considerable amounts of text data, which can be subject to potentially prejudicial content. As a result, the model’s outputs may reflect and even strengthen such biases.

Another major ChatGPT flaw is bias. Chatbot algorithms are often trained on datasets that reflect the prejudices of the people who wrote them. This can lead to chatbots that are biased against certain groups of people or that propagate discrimination and prejudice.

Chatbots can also be biased towards providing certain answers or in their ability to understand certain conversations. ChatGPT has been trained on an expansive data set written by humans throughout history. Unfortunately, this means that the same potential biases found in the original text may be reflected in the AI-generated language models.

It is important to note that bias can be difficult to detect and should be taken into consideration when deploying chatbots.

Spreading Misinformation

- ChatGPT can create text that appears to have been composed by a person, enabling it to be utilized to propagate misinformation.

Though chatbots are designed to provide accurate information, they are often limited in their understanding of the data they are given. This can lead to the dissemination of misinformation, another major ChatGPT flaw. A chatbot may answer questions based on outdated or incorrect information and could even mislead the people who rely on it.

As chatbots become more popular, it’s important to consider the accuracy of the information they provide and ensure that they are offering the most up-to-date, accurate information.

Plagiarism & Cheating

- Plagiarism isn’t a new problem in the education industry. However, the introduction of ChatGPT has revolutionized the use of AI for the creation of all types of content, including homework. AI tools will continue to make things challenging for educators trying to distinguish between plagiarized, AI-generated, and original student-made material.

Have you noticed how ChatGPT starts to get overloaded every weekday around 3pm? As soon as school is out, thousands of kids flock to chatbot AI platforms to “do their homework”. This is a big ChatGPT flaw, but it’s hard to blame these kids. What would you do if there was an online chatbot capable of doing your homework for you?

As AI tools like ChatGPT become more accessible, it will be virtually impossible for the education industry to filter out what work was done by students or chatbots.

It will be interesting to see how the education industry responds.

Job Displacement

- Language models have the ability to automate common tasks. As AI tools get more sophisticated, they will undoubtedly eliminate human jobs.

One of the biggest issues with chatbot technology is job displacement. The introduction of chatbots to business processes is increasingly becoming a threat to jobs.

Publishers like McClatchy have already started experimenting with job automation and replacing journalists with artificial intelligence platforms. They are even giving their AI reporters stupid names like NANDOBOT.

NOTE: McClatchy, a major US media company, has laid off hundreds of journalists in recent years due to a decline in print advertising and a shift towards digital news consumption. The company has been restructuring and streamlining its operations to stay competitive in the face of these challenges, resulting in significant job losses for many journalists who worked for McClatchy’s newspapers.

Inflexibility In Response

- Chatbots are intelligent, but they are still just an “artificial” intelligence, incapable of detecting human emotion and adjusting their responses accordingly.

One of the most glaring ChatGPT flaws is its inability to grasp the complexity of human language and tone in conversations. AI is simply programmed to produce words based on a given input. It is incapable of comprehending the implications behind the words it provides in its output.

Since AI can’t accurately detect human emotion (yet), a chatbot is unable to recognize when the conversation has taken an unexpected turn, recognize synonyms, or provide appropriate follow-up responses. Without this ability, AI can create a frustrating experience for emotional users. This is especially true if AI is being used as part of a customer service strategy.

Just try to imagine what it would be like to have an artificial intelligence tell you that a close family member had died in an accident. It would be like finding out that your parents had died from a robocall.

Invading Privacy & Accessing Private Information

- ChatGPT and other language models are able to leverage large amounts of data, which can sometimes include personal information about individuals. There are valid concerns regarding the methods used to collect, employ and safeguard such data.

The information being fed to chatbots can sometimes contain personal information about individuals, such as names, contact details, and preferences. Not only can the chatbot access this information, but it might be able to use it in unauthorized ways, such as creating targeted advertisements and phishing emails.

Unfortunately, it is often hard to identify personal information in datasets and filter it out.

Writing Malware Code

- ChatGPT can be used to create malware code capable of hacking systems or executing cyber-attacks.

One of the biggest chatbot flaws is how easy it is for cybercriminals to exploit the AI. Even if safety measures have been implemented, chatbots can be tricked into writing malware code.

Before chatbots were so easily accessible, experienced computer programmers were the only ones capable of writing malware. Unfortunately, chatbots like ChatGPT make this illegal activity much more accessible to inexperienced hackers.

Phishing

- Chatbots can be utilized to craft persuasive phishing emails or messages that could be used to gain unauthorized access to personal information or financial data.

Unfortunately, phishing attacks, the malicious activity in which fraudulent emails or messages are crafted in order to acquire confidential information, will likely increase as chatbot tools become more prevalent in our society.

Spammers can use chatbots to generate advanced phishing emails custom designed for each targeted individual. In the near future, being able to quickly identify phishing emails is going to get a lot harder.

Spam

- Language models like ChatGPT can be exploited to generate massive amounts of spam.

Be on the lookout for a huge increase in spam in both your email inbox and social media feeds.

ChatGPT is a powerful language model that could be used to generate massive quantities of spam content. This content could then be used for a variety of malicious purposes, such as flooding email inboxes and social media feeds with spam.

This could be incredibly damaging to both individuals and businesses, as it could overwhelm users with irrelevant content and overload IT infrastructure.

Chatbot Spoofing

- How do you really know that you are chatting with an artificial intelligence?

This is a newer threat that not many people have considered yet. What if a human has infiltrated the chatbot that you are using? Just imagine the implications for a moment. How would you know if the information you are receiving is accurate or not? Would you be able to trust what the chatbot tells you, or would you be left questioning its validity? How would you be able to discern whether the chatbot is being truthful, or if it is being manipulated by an outside force?

Now, what if a government leader, like a president, is using a chatbot that is being controlled by another government? What misinformation could be fed to that leader? How could they be manipulated into making decisions that would benefit another nation?

Why You Should Never Trust Online Chatbots

Playing with new online chatbot technology, like ChatGPT, is exciting. However, it is important to be aware of the underlying issues and why chatbots should be treated more like toys than tools.

Chatbots are not perfect. From spreading bias and misinformation to their potential use in cybercrime, there are a lot of ethical issues that need to be addressed.

As companies like Google, Microsoft, and OpenAI race to develop new chatbot technology advancements, they also need to focus on protecting AI tools from abuse and criminal activity.

It’s difficult to predict what future issues may arise with ChatGPT. But in the meantime, have fun playing with the Internet’s new toy. Just remember that it is also sometimes a biased liar that can be used for evil.


Frank Wilson is a retired teacher with over 30 years of combined experience in the education, small business technology, and real estate business. He now blogs as a hobby and spends most days tinkering with old computers. Wilson is passionate about tech, enjoys fishing, and loves drinking beer.
